Medical AI Tools: What Role Should They Play?

- symthasreeskoganti

- Oct 31, 2025


Fairfax, VA

Health is a source of considerable concern. Patients worry about medical conditions, struggle with complex terminology, and face long wait times and high bills. Navigating healthcare and finding solutions for their health can be genuinely difficult. With the recent rise of artificial intelligence (AI), it was only natural that AI-integrated tools would be developed for medical purposes. Yet as concerns grow about the role AI should play in many fields, a similar question arises in healthcare: to what extent should AI be permitted in medicine?

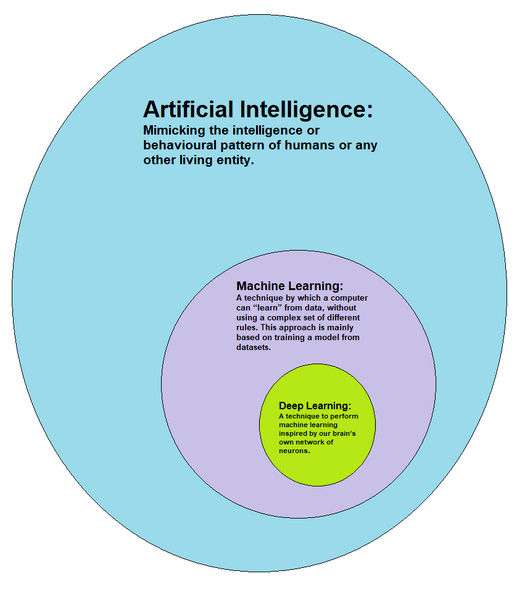

The term “AI” is a broad one. It covers computer science algorithms and techniques such as machine learning (ML), large language models (LLMs), and more. Because of this, and because AI can be integrated into clinical care in so many ways, AI-integrated tools in healthcare serve a wide variety of purposes, not all of which can be covered in this blog. Most, however, fall into the broad categories of diagnosis, treatment, data analytics, patient care, or administrative applications. The American Medical Association (AMA) also classifies AI tools into three categories based on how much physician or health provider involvement they require. Ordered from the most provider involvement to the least, these are:

- **Assistive:** the tool only detects clinically relevant data, which a provider must interpret.
- **Augmentative:** the tool analyzes or quantifies data, but a provider must still interpret the output.
- **Autonomous:** the tool interprets data and draws clinically meaningful conclusions without provider involvement.

The evidence on how effective AI tools have been in healthcare is mixed. One deep learning algorithm, described in a 2020 paper by Seog Han and colleagues, was trained to classify skin cancer and other skin lesions. Its performance was on par with that of trained dermatologists, and the authors suggested it may have a place in selecting treatment plans.

Beyond diagnosing existing conditions, AI development has expanded to predicting future outcomes. In a 2023 paper, Sheu and colleagues described an AI model that predicts how patients will respond to particular antidepressant drugs based on their electronic health records (EHRs). The model was evaluated with AUROC, the area under the receiver operating characteristic curve. AUROC is essentially the probability that the model ranks a randomly chosen patient who responded above a randomly chosen patient who did not. A value of 0.5 is no better than chance, and 1.0 is perfect. The model achieved AUROC values of at least 0.70. That is a relatively good level of prediction given the limitations of EHR data, such as the amount of information recorded differing between patients.
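To make the AUROC idea more concrete, here is a minimal Python sketch of its ranking interpretation. It assumes binary outcomes (1 = responded to the drug, 0 = did not) and a model that gives each patient a score. The function name `auroc` and the example scores are hypothetical and made up for illustration. They are not data or code from Sheu and colleagues' study.

```python
def auroc(scores, labels):
    """Rank-based AUROC: the fraction of (positive, negative) pairs in which
    the positive case gets the higher score. Tied scores count as half."""
    positives = [s for s, y in zip(scores, labels) if y == 1]
    negatives = [s for s, y in zip(scores, labels) if y == 0]
    if not positives or not negatives:
        raise ValueError("need at least one positive and one negative case")

    correctly_ranked = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                correctly_ranked += 1.0   # responder ranked above non-responder
            elif p == n:
                correctly_ranked += 0.5   # a tie counts as half credit
    return correctly_ranked / (len(positives) * len(negatives))


# Hypothetical scores for five made-up patients (not real study data).
example_scores = [0.9, 0.8, 0.4, 0.35, 0.1]
example_labels = [1, 0, 1, 0, 0]
print(auroc(example_scores, example_labels))  # 5 of 6 pairs ranked correctly, about 0.83
```

In this toy example, the two responders outrank the non-responders in 5 of the 6 possible pairs. Libraries such as scikit-learn's `roc_auc_score` compute the same quantity more efficiently for real datasets.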

However, not all AI tools have shown such positive outcomes. OpenAI released Whisper, an AI transcription tool, in 2022. Whisper has since been used in business and medical settings, including transcribing staff-patient interactions for records. OpenAI stated that Whisper was trained on 680,000 hours of audio and the associated transcripts, and it claimed high accuracy for the tool. The company also advised against using Whisper in high-risk domains.

That caution makes sense given what reports and researchers have found. Whisper tends to “hallucinate” statements that were never spoken. Some Whisper-based medical tools also reportedly erase the original audio after transcribing it. In settings like hospitals, which depend on clear documentation, that is a concerning loss of evidence. The current extent of Whisper use in hospitals is uncertain. Still, multiple news outlets reported in late 2024 that thousands of medical professionals were using Whisper-based tools for patient visits, even after these concerns became public. This remains genuinely concerning for two groups: patients, who need accurate records of past visits well into the future, and medical providers, who rely on accurate transcriptions to deliver the best care.

AI usage, then, is a bit of a mixed bag. How do we decide which AI-integrated tools should be used, and which kinds would best benefit healthcare systems and everyone who relies on them? The legal side of this question is among the most important, and it will likely be settled in court cases over the next decade or so. For now, much of the regulation surrounding AI tools remains a legal gray zone.

There is no question that AI technology should be embraced. It is a growing field, and making full use of it could lift some of the burden from overworked healthcare workers. Even so, any given tool should meet three standards. It should be accurate and consistent enough for professional use. It should meaningfully reduce healthcare workloads or empower patients. And, preferably, it should save costs.

It is also worth considering that tools for direct patient care may be better off as assistive or augmentative rather than autonomous, despite the time autonomous tools might save. Tools that assist healthcare professionals, for example by offering a second opinion on a diagnosis, keep the important human element of care present for patients. They may also help avoid some legal gray areas if lawsuits arise. In the end, the role of AI-integrated tools in healthcare's future remains uncertain, but if we move forward carefully, the future of medicine looks promising.

Sources

