Can AI ever take on Bioinformatics?

- Dominika Romanik

- Nov 7, 2025

- 9 min read

Lake Orion, Michigan

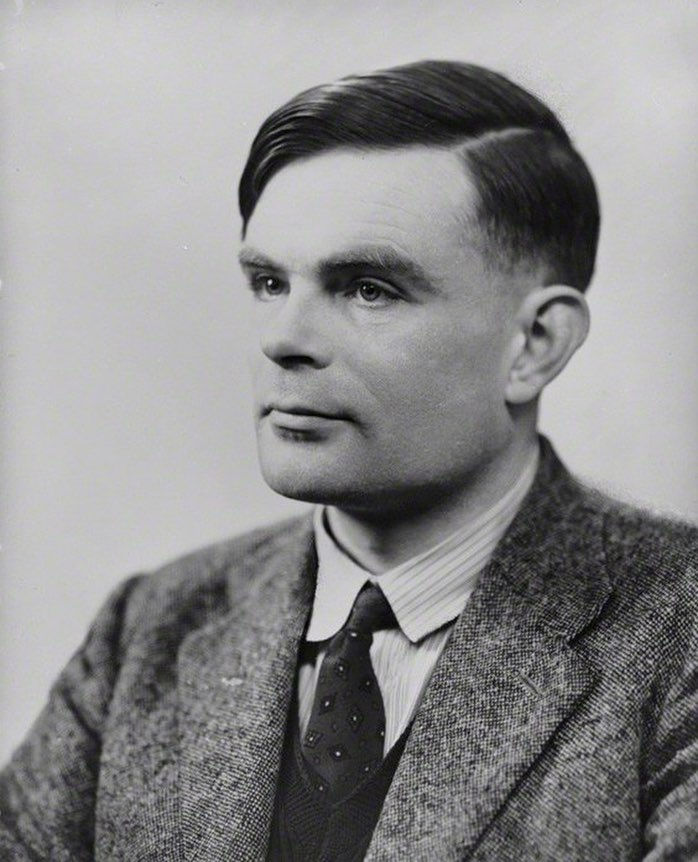

Seeds of Intelligence

A.I.’s journey began in the 1930s in the hands of Alan Turing. In 1935, Turing described the concept of an abstract computing machine with a limitless memory. This machine would have a scanner that moves back and forth through the memory, reading what it finds and writing further symbols in response. This was Turing’s stored-program concept, which would become universally known as the Turing machine (the model on which all modern computers are based). However, it wasn’t until 1951 that the earliest successful AI program was written. This program could complete a game of checkers in a reasonable time, a fascinating milestone for humanity. It wasn’t until 1966 that the first chatbot, ELIZA, was created. And it wasn’t until ChatGPT that AI fully exploded. On November 30th, 2022, the world woke up to the launch of ChatGPT, an AI chatbot capable of ‘understanding’ and generating human-like text at a level the public had not seen before. This was the dawn of a new era. The creation of the ‘new normal.’ Yet as this AI becomes more advanced and its training data grows, it does raise the question… are we truly going to be replaced?

Bioinformatics: The Digital Microscope

What is bioinformatics? And why should we even care whether AI takes over it or not? Bioinformatics is a field that sits at the intersection of programming and biology (it’s usually crowded with biologists who learned programming or mathematicians who learned biology). This interdisciplinary area involves processing huge amounts of biological data and making sense of it. This can include (but is not limited to) creating databases to store experimental data, predicting the way proteins fold, and modeling chemical reactions in the human body. Alongside genomic sequence data, four other types of data commonly used in bioinformatics are transcriptomics, proteomics, phenomics, and chemoinformatics data. Between them, these data types can answer most questions a lab may have, but the approach the lab takes will depend on the data. So why should we care if AI takes over bioinformatics? If AI were to take over the entirety of bioinformatics, it would reshape the future of healthcare. Furthermore, who would control and guide this AI? The government? A private firm? A bunch of researchers? Who would you truly trust with your DNA sequence?

So… can AI even take over Bioinformatics?

AI has already been integrated into bioinformatics, but it’s not going to replace bioinformaticians any time soon. It is used in a wide range of contexts, including genome sequencing, protein structure prediction, drug discovery, systems biology, personalized medicine, imaging, signal processing, and text mining. Throughout all of these, AI algorithms have shown outstanding effectiveness in solving complex biological problems, from decoding genetic sequences to personalizing healthcare (as stated in NLM’s “Artificial intelligence and bioinformatics: a journey from traditional techniques to smart approaches”). However, challenges remain despite AI’s tremendous potential. Currently, the interpretability of AI models and the quality of their data both raise red flags. Some of AI’s decision processes can be difficult to understand or explain, and the biological data it relies on is often noisy, incomplete, and heterogeneous (which makes it difficult to combine datasets from different sources or experimental platforms). On the less technical side of things, patients have raised privacy concerns over AI. While techniques like differential privacy and homomorphic encryption are being explored, patients are less likely to give away their data unless they know for certain that it won’t be kept in a database. Thus, AI is a useful tool for bioinformaticians… for now. After all, as of August 20th, 2025, the job of “Bioinformatics Scientist” was listed with a 36% chance of becoming fully automated. However, that statistic is based on the original Frey & Osborne study, which measured susceptibility to computerization rather than actual, imminent automation. So who truly knows what the odds are for the poor bioinformaticians? For all we know, they might be out of a job tomorrow.

What are the Potential Benefits of AI Taking Over Bioinformatics?

There are plenty of articles that argue AI is essential for bioinformatics (Biology Insights is one of them). They say AI offers a powerful solution by providing advanced capabilities in pattern recognition, data processing, and predictive modelling. This computational power allows researchers to accelerate their discoveries in biology and medicine. But beyond being faster than a human, what other qualities do these bioinformatics-geared AI models possess?

Increased Efficiency

According to Biology Insights, AI can accelerate the traditionally lengthy and very expensive process of drug discovery. The article states that AI can identify potential drug targets more efficiently, which allows researchers to prioritize promising drug candidates and significantly reduces the number of compounds that need to be synthesized and tested experimentally.

Increased Accuracy

Have you ever looked back at a math problem and realized that you accidentally wrote a negative sign instead of a positive one? AI can help catch slips like that (especially when it comes to genomic analysis and medical applications). Next-generation sequencing technologies produce massive datasets that require sophisticated computational analysis. AI algorithms have already significantly improved both the accuracy and the speed of that analysis, specifically in the process of reconstructing complete genomic sequences from shorter fragments. Furthermore, deep learning models can now identify patterns in sequencing data that help resolve complex regions containing repetitive sequences or structural variation. An example of this is Google’s DeepVariant. The algorithm identifies genetic variants with a convolutional neural network (similar to those used in image recognition), which significantly improved accuracy over previous methods. Pair this with AI’s ability to predict a disease’s progression more accurately by integrating diverse genomic information, and you have tools that may detect subtle changes years before symptoms of a disease appear.
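To make "reconstructing complete genomic sequences from shorter fragments" a little more concrete, here is a minimal Python sketch of the underlying puzzle. It is a toy greedy overlap merge, not how DeepVariant or any production assembler works, and it contains no machine learning at all. The function names (`overlap`, `greedy_assemble`), the `min_len` threshold, and the three example reads are all made up for illustration, and the sketch assumes error-free reads from a single strand.

```python
def overlap(a: str, b: str, min_len: int = 3) -> int:
    """Length of the longest suffix of `a` that is also a prefix of `b`.

    Returns 0 when the best overlap is shorter than `min_len`.
    """
    for size in range(min(len(a), len(b)), min_len - 1, -1):
        if a.endswith(b[:size]):
            return size
    return 0


def greedy_assemble(reads: list[str], min_len: int = 3) -> str:
    """Repeatedly merge the two reads with the largest overlap.

    Toy assumption: reads are error-free and come from one strand.
    Returns the longest sequence left once no overlaps remain.
    """
    reads = list(dict.fromkeys(reads))  # drop exact duplicates, keep order
    while len(reads) > 1:
        best_size, best_i, best_j = 0, None, None
        for i, a in enumerate(reads):
            for j, b in enumerate(reads):
                if i != j:
                    size = overlap(a, b, min_len)
                    if size > best_size:
                        best_size, best_i, best_j = size, i, j
        if best_size == 0:
            break  # nothing overlaps any more, so stop merging
        merged = reads[best_i] + reads[best_j][best_size:]
        reads = [r for k, r in enumerate(reads) if k not in (best_i, best_j)]
        reads.append(merged)
    return max(reads, key=len)


# Three made-up fragments that overlap end to end.
reads = ["ATGGCGT", "GCGTGCA", "TGCAATC"]
print(greedy_assemble(reads))
```

Real sequencing data is far messier: reads contain errors, and repetitive regions produce overlaps that are ambiguous. That ambiguity is exactly where the learned models described above are meant to help.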

Enhanced Predictive Capabilities

As stated before, AI can spot patterns in biological data well before a human would catch on to them. This is because AI draws on vast amounts of data, so it can detect small changes that a human would likely miss. In particular, AI systems are increasingly capable of predicting the function and impact of genetic variants. Tools like Combined Annotation Dependent Depletion (CADD) use machine learning to integrate multiple sources of genomic annotation and then predict how harmful a given variant is likely to be. These predictions are invaluable for studies on rare diseases and cancer genomics. They’re powerful enough that they might help point researchers toward potential treatments for such cancers and diseases!

Cost-Effectiveness

AI can help researchers avoid costly failures. This is particularly true in oncology and drug development. In drug development, AI can predict the interactions a drug may have with the body or with other drugs. It might also predict harmful side effects, which would help healthcare workers and researchers avoid costly failures in the later stages of development. Furthermore, AI can help determine which treatments would work best on a particular tumor, which can dramatically improve treatment outcomes while reducing unnecessary side effects and costs.

What are the Potential Drawbacks of AI Taking Over Bioinformatics?

Data Quality

The quality of the data that a bioinformatics AI works with (and therefore the quality of what it produces) can sometimes be subpar at best. According to Fios Genomics, omics datasets are notoriously complex and prone to technical noise, batch effects, missing values, and inherent biological variability. Furthermore, patient data can quickly become outdated (often within a few months), which makes it harder for algorithms to predict future outcomes. If medical records are incomplete and the data changes quickly, AI output is likely to be inconsistent or contain errors. Even with carefully curated datasets, there will be gaps, which limits how effectively AI can use the data.
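For readers who have not run into "missing values" and "batch effects" before, the sketch below shows two of the simplest clean-up steps in plain Python. It is an illustration under simplifying assumptions, not a recommended pipeline: the function names and numbers are invented, the imputation assumes at least one observed value, and mean-centering only removes a constant offset per batch (and can erase real biology if the batches also differ in what was measured).

```python
from statistics import mean, median


def impute_missing(values):
    """Replace missing measurements (None) with the median of the observed ones."""
    observed = [v for v in values if v is not None]
    fill = median(observed)  # assumes at least one observed value
    return [fill if v is None else v for v in values]


def center_batches(values, batches):
    """Subtract each batch's mean to remove a constant per-batch offset."""
    batch_means = {
        b: mean(v for v, vb in zip(values, batches) if vb == b)
        for b in set(batches)
    }
    return [v - batch_means[b] for v, b in zip(values, batches)]


# Made-up expression values for one gene, with one measurement missing.
print(impute_missing([1.0, None, 3.0]))

# Batch "B" was run on a different day and reads about 10 units higher.
print(center_batches([1.0, 3.0, 11.0, 13.0], ["A", "A", "B", "B"]))
```

After centering, the two batches line up, which is the point: the 10-unit gap came from the experiment, not from the biology.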

Algorithmic Limitations

ML and DL models need very large datasets to make accurate predictions, and a lack of access to data holds them back. Patient records are private and HIPAA-protected, so unfortunately for AI, institutions are often unwilling to contribute them to a large shared database, which could put those files at risk. Ideally, ML algorithms would keep improving by learning from new information, but resistance within health organizations can slow this down. Furthermore, healthcare workers also criticize AI for the “black-box problem” (that is, being unable to explain how it reaches its predictions). If the system’s decision had to be defended legally, it would have no way to do so (and this is particularly important if the system gives the wrong recommendation for drugs or treatments). For AI to be trusted by patients, doctors, and researchers, these models need an explainable system that humans can interpret without “just because” arguments. Without explainable algorithms, AI will not make it far in bioinformatics or even the wider healthcare world.
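What would an answer that is not "just because" look like? One very simple case is a linear score, where every input's contribution can be read off directly. The Python sketch below is only an illustration of that idea. The feature names, weights, and patient values are hypothetical, and real deep learning models need dedicated explanation techniques precisely because they cannot be broken down this easily.

```python
def explain_linear_score(weights, features, bias=0.0):
    """Return a linear risk score plus each feature's contribution to it.

    Each contribution is weight * value, so the parts add up to the
    score (minus the bias) and can be shown to a clinician.
    """
    contributions = {name: weights[name] * value for name, value in features.items()}
    score = bias + sum(contributions.values())
    # List the features with the biggest influence (positive or negative) first.
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return score, ranked


# Hypothetical weights and patient values, invented for this example.
weights = {"variant_count": 0.5, "age_decades": 0.2, "biomarker_level": -1.0}
patient = {"variant_count": 4, "age_decades": 5, "biomarker_level": 1.5}

score, ranked = explain_linear_score(weights, patient, bias=-1.0)
print(score)
for name, contribution in ranked:
    print(name, contribution)
```

Here the model can say which input pushed the score up or down and by how much, which is the kind of account a black-box system cannot give on its own.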

Data Bias

Unfortunately, just like any human on this planet, AI can be biased. AI outcomes can be distorted by bias in the data that was used to train a particular model. For example, if minority groups are under-represented in a study or a medical dataset, the predictions may not work for them. Sure, this can seem like an easy fix: create multi-ethnic training sets and maybe even design models that are tailored to handle foreseen bias. However, what happens when these biases are not known or not obvious? What if a study tests a hundred women and just one happens to have cancer? What if only one of them has diabetes or Alzheimer's? Would the system still work for them? Or would the system fail them? Furthermore, according to “Drawbacks of Artificial Intelligence and Their Potential Solutions in the Healthcare Sector” (published by the National Library of Medicine), it is still unclear whether multi-ethnic training sets or bias-aware model designs would even be effective in real-world settings.
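The "hundred women, one with cancer" scenario can be worked through with a few lines of Python. This is a made-up illustration rather than data from any study: it imagines a lazy model that simply predicts "no cancer" for everyone and then checks how good that looks on paper.

```python
def accuracy_and_recall(y_true, y_pred):
    """Accuracy: share of all predictions that are right.
    Recall: share of the actual positive cases that the model found.
    """
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    true_positives = sum(t == 1 and p == 1 for t, p in zip(y_true, y_pred))
    actual_positives = sum(y_true)
    accuracy = correct / len(y_true)
    recall = true_positives / actual_positives if actual_positives else float("nan")
    return accuracy, recall


# 100 patients, exactly one of whom has cancer (1 = cancer, 0 = no cancer).
y_true = [1] + [0] * 99
# A lazy model that says "no cancer" for everyone.
y_pred = [0] * 100

accuracy, recall = accuracy_and_recall(y_true, y_pred)
print(accuracy, recall)
```

The lazy model is right for 99 of the 100 patients, yet it misses the one person who actually has cancer. A system trained on data like this can look excellent by one measure while failing exactly the patients it was built for.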

Privacy and Security Risks

AI poses quite the security and privacy risk. Medical records are private and sensitive. They contain information on your past, family, and social history. They contain diagnoses, prescribed medications and treatments, as well as lab results. They even include hospital admissions, billing information, insurance requests and responses, authorizations such as a medical power of attorney, your organ donor status, and records shared by other healthcare providers. Would you truly want to share this data with an AI? With the possibility of a hacker getting into it? Probably not, which is why many healthcare providers are serious about protecting your information and wary of AI.

Ethical Concerns

Artificial intelligence has faced ethical concerns since it was first imagined. After all, building an AI that consumes tons and tons of data and may potentially take over your job isn’t the greatest way to convince people to give it a try. However, the biggest issue AI faces is accountability. This is a huge issue in healthcare. HUGE. After all, who is supposed to take the blame when the AI recommends the wrong treatment or medication? The person who made the algorithm? The hospital that deployed the AI? Or the person who entered the patient’s data? This is, of course, not as big of an issue if the problem is low-stakes, but if AI advises wrongly in a life-or-death situation, things can get… tricky. It is simply not clear who should be blamed when errors occur. Bioinformaticians may not be responsible, since they didn’t build the AI or control how it reached its recommendation, but blaming the developers may not fully make sense either. Because of this, regions like China have banned the use of AI in ethical medical decisions. Such a choice was made not only because AI lacks a universal ethical guideline, but also because the potential outcomes could be hazardous for patients.

Conclusion

So, can AI ever take on bioinformatics? While there are many reasons why AI could fail spectacularly in the field of bioinformatics, there are also various reasons why it might succeed. The effectiveness of AI models relies on the quality and diversity of the biological datasets used for training. High-quality data, free from errors and biases, ensures that AI learns accurate patterns and relationships within biological systems. Rigorous testing of AI predictions is a necessity to ensure that an algorithm is accurate and reliable in real-world biological and clinical applications. Without high-quality data and rigorous testing, AI has no future in bioinformatics. But as long as human curiosity and the need for cures persist, AI is unlikely to disappear from this field.

